I fully believe we can deliver projects faster, with better code, expose the company to less risk and in the end be more profitable if team members pair program.

The world and businesses worked for thousands of years, and for decades after computers were invented, before agile methodologies took over how projects and companies were run. Projects were delivered and companies didn’t go bust.

Workable products got shipped before testing was done thoroughly, before unit testing was common and before TDD was even heard of.

At the same time many teams worked well, developers wrote good code, met deadlines and shipped products before pair programming was a thing.

Sort of..

Were we also fine before cars replaced horses, computers replaced pen and paper, modern medicine replaced witch doctors?

Before Agile, projects were often delivered late, did not meet the updated requirements, and missed out on all the other benefits of Agile. But some were delivered on time, with the desired features and to budget. Just fewer, and a lot slower.

And products were shipped with many bugs over and over again, with costly fixes over and over again.

Why not pair?

Double the resource cost

Many companies and managers cannot see past the issue of using two people on one problem. Basically doubling the resourcing cost per story/task/whatever.

And some (not many) are aware of the teaching of The Mythical Man-Month: that adding more resources to a problem does not give a linear reduction in the time to finish it.

My space - my own thing

Many people and developers are also not interested in working with others on a task, especially all the time. They feel their private space is violated and their work habits interfered with. I/they/most people do like to think, experiment and solve a problem on their own. And be trusted to do so.

Being forced to sit and watch, to talk to another person about every step of solving a problem is a big change in work habits and social skill requirements.

So why pair program?

Three reasons: code quality, knowledge share and feature speed.

Code quality

Mini plan

If two people sit together to work on a task they are quite likely to discuss the issue and basically perform a mini up-front architecture analysis of the problem. They might even apply a TDD approach to solving it. In essence it should deliver a slightly more thought-out solution than simply jumping in at the deep end and hacking out a solution.

Human rubber duck

Explaining the problem and solution to a human rubber duck also unveils the root cause and the better solution much quicker.

Fewer rabbit holes

Two people are less likely, though not guaranteed, to go down a completely wrong rabbit hole in solving the problem. And at least they are quicker to realise that they have and to pull out sooner.

No short cuts

If you sit with someone you are less likely to cut corners and take short cuts in your approach and implementation. This is a very good reason why pair programming results in better code quality. You are for example less likely to skip writing tests if someone sits with you.

Fewer mistakes

Simple mistakes and syntax errors are quickly spotted and corrected. Often one of you ends up acting as a human compiler and typo spotter, but that is not really a bad thing; just don’t be offended by it.

Clean readable code

With two people agreeing on the implementation, the code should be cleaner, more readable and more maintainable, as you both have to agree on and understand the delivered implementation. This basically negates the need for an extensive code review process later on, as covered in my "Pair with people you like and code reviews with people you don't like" blog post.

Ninja code

A lone developer, often a so-called ninja, may also try to write smart code: code that no one else can understand or maintain when that person leaves. A pair would prevent writing code that is unnecessarily smart, and share an understanding of elegant code that is actually useful.

In the end, per finished feature, the code is on average more likely to be of higher quality, to have more tests, to be more maintainable and to properly solve the initial problem.

Knowledge share

One obvious benefit of pair programming is that now two people know everything about that task. And two or more now know about the product / system that the task involves.

No single point of failure

This reduces project, product and company risks and costs a lot. If one person goes on holiday or is sick there is no stoppage in working on that task or using that system, as the other half of the pair can continue on their own.

And worse, if a person leaves the company or is hit by a bus, there is no panic as multiple people know about that system or feature. Ideally, if a person is off for a longer period or permanently, a new pair is formed quickly to remove the new temporary single point of failure threat.

Best practices and showing scars

Another good knowledge share benefit of pairing up is that the two people start to share best practices in approaches to implementation or even develop new ones. They share stories of previous scars of bad practices. This should make them both better developers.

Rotate pairs - spread knowledge

If you also rotate the members of each pair, continuously or at some regular frequency, you gain even more knowledge share.

Product and system knowledge is now shared across the entire team. The risk of losing knowledge is reduced further.

Best practices are spread around the team. Members pick up new skills and enjoy sharing their experiences. The knowledge and quality increases, and team morale should also increase.
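How you rotate is up to the team, but as a rough illustration, here is a minimal sketch of one possible rotation: a simple round-robin where everybody pairs with everybody else exactly once. The function name `rotation_schedule` and the team member names are made up for this example, and the round-robin approach is my assumption of a sensible default, not a prescribed method.

```python
def rotation_schedule(members):
    """Return a list of rounds; each round is a list of pairs.

    Round-robin ("circle") rotation: the first person stays put and
    everyone else moves one seat per round. With an odd number of
    people a None placeholder is added, so whoever is paired with
    None works solo that round.
    """
    people = list(members)
    if len(people) % 2:
        people.append(None)  # odd team size: one person is unpaired each round
    n = len(people)
    rounds = []
    for _ in range(n - 1):
        # Pair the first seat with the last, the second with the second last, etc.
        rounds.append([(people[i], people[n - 1 - i]) for i in range(n // 2)])
        # Keep the first seat fixed and rotate the remaining seats by one.
        people = [people[0], people[-1]] + people[1:-1]
    return rounds


# Hypothetical team, purely for illustration.
for number, pairs in enumerate(rotation_schedule(["Ann", "Bob", "Cat", "Dan"]), start=1):
    print(f"Rotation {number}: {pairs}")
```

A round could be a day, a story or an iteration; that is a convention for the team to set.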

Feature speed

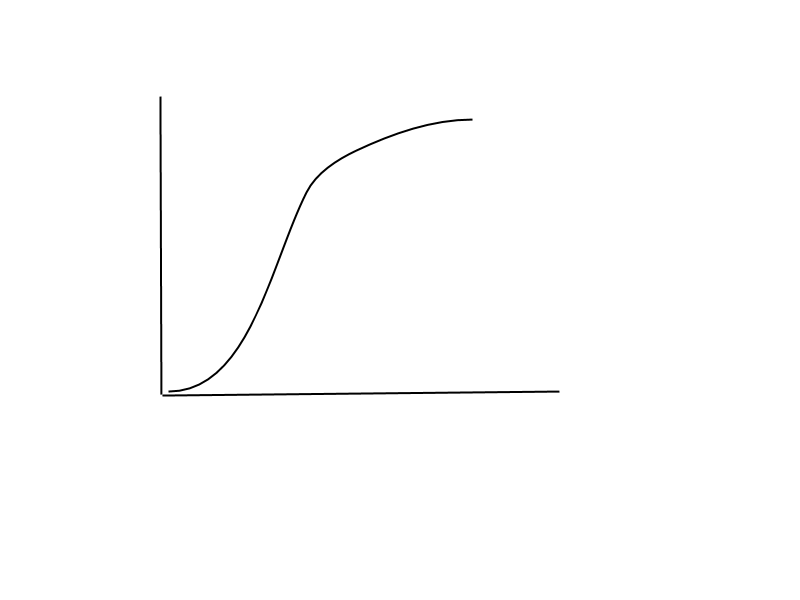

Initially the feature tasks and project speed may be slower if your team members pair program. In a mathematical sense speed could be halved, as pairing ties up two members per task. And the benefits of pair programming are often longer term.

But some implementations will go quicker, as you are less likely to waste time on the wrong rabbit holes etc.

Speed per feature != initial implementation

In the longer term a great increase in benefit is visible. If you count bugs, planning, etc as part of the actual total feature speed, a pair programmed feature is much more likely to be of higher quality and less likely to come back over and over again with bugs. And it is bug fixes that take time.

The original implementation is often a low fraction of the total effort and time cost per feature. As Olaf describes in his "Pair programming Economics" post, implementation is perhaps only 10% of the time spent on a task.
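A rough back-of-the-envelope sketch of that argument follows. It assumes that pairing simply doubles the person-hours of the initial implementation and that it saves some fraction of all the other work (bugs, fixes, later changes). The function names and the example numbers (10 hours of implementation, 90 hours of other work, a 25% saving) are made up for illustration; only the 10% share comes from the post mentioned above.

```python
def total_effort(implementation, other_work, pairing=False, rework_saved=0.0):
    """Person-hours spent on one feature over its whole life.

    implementation: solo hours to build the feature the first time
    other_work:     solo hours for everything else (bugs, fixes, changes)
    pairing:        if True, implementation is assumed to cost double
    rework_saved:   assumed fraction of other_work that pairing avoids (0.0 to 1.0)
    """
    if pairing:
        return implementation * 2 + other_work * (1 - rework_saved)
    return implementation + other_work


def break_even_saving(implementation_share):
    """Fraction of the other work pairing must save to cost the same as solo."""
    if not 0 < implementation_share < 1:
        raise ValueError("implementation_share must be between 0 and 1")
    return implementation_share / (1 - implementation_share)


# Hypothetical feature: implementation is 10% of a 100 hour total.
solo = total_effort(10, 90)
paired = total_effort(10, 90, pairing=True, rework_saved=0.25)
print(f"solo: {solo:.1f}h, paired: {paired:.1f}h")
print(f"break-even saving: {break_even_saving(0.10):.1%}")
```

With these assumed numbers the paired feature costs 87.5 person-hours against 100 solo, and pairing breaks even once it saves about 11% of the non-implementation work. The real saving will of course vary per team and per feature.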

Clean code

In addition, with a good clean implementation the code is more maintainable, making future extensions and fixes much easier and quicker.

Less procrastination

There will also be less procrastination about dealing with issues, talking to other people, etc. as two people should drive each other on to finish a task.

Less wasted time

When working on your own you may often spend a frustratingly long time trying to figure out what the actual problem is, and how and where you should solve it. Sharing that with another person often cuts that waste down, as it is quite likely one of you already knows where to look.

Quicker ramp up and fewer resource bottlenecks

If a system or feature is already known by several team members, if not all, then a new related task can be ramped up quickly compared to starting on a feature or system you have never touched before. Shared knowledge also means there is no virtual bottleneck of waiting for one person to be available to work on an issue because they are the only one who knows about it.

Not religious but no tricks

Fake agile

Many companies and teams say they are Agile but in reality they are not. “Being Agile” is not a black and white thing; it is a grey scale from non-agile black to an unreachable white. Most projects are unfortunately quite dark grey even if they think and say they are Agile. Simply using Atlassian’s Jira, having a daily standup, or even some sort of iteration does not make you Agile. It is much more about fully adopting the practices and continuously trying to become more agile.

Fake pair programming

The same goes for pair programming. Many say they do some sort of pair programming. Simply sitting together occasionally for a little while is not enough when most of the time they work by themselves.

You need to commit to pair programming being the default convention for every task, for everyone. Set adaptable conventions on how to organise pairing and on when exceptions are acceptable, not the other way round.

Shared responsibility

Agile processes mean not delegating and assigning tasks to individuals; instead team members pull tasks from the queue and make themselves responsible for those tasks. If they pair program, that assignment is for both in the pair, not one person. If it goes well, both get praised; if there is a problem, both stand responsible. This is similar to how the team shares praise and criticism.

Personal space and time

Another important step is to allow for personal space and time away from the other half of the pair. I don’t believe 100% pairing from 9am to 5pm is a good thing; there is no need to be in each other’s armpits all day. That would cause friction and stress on the team. There is no need to be religious about it. It’s a convention, not a rule.

Let people take breaks from each other. Much as the Pomodoro Technique allows frequent breaks from work, pairs should take breaks from each other.

They could spend that time researching the tasks or performing mini hacky experiments. Watching another person google around for an hour is neither productive nor fun. Allowing people to catch up with IM chats and emails, make personal calls etc. will make for a happier and more productive pair when they pair up again.

Non pair tasks

Some tasks do not need to be paired on. Simple research, monitoring and tiny typo fixes can be done by just one person. A nice balance, where the majority of the time is spent on important paired tasks with a few smaller non-paired tasks alongside, is probably a good idea.

Effective onboarding

Taking on new team members and recruiting junior members also benefit greatly from pair programming. Sarah Mei wrote a great article on the benefits of "Pairing with Junior Developers". Leaving new team members to plod along fixing bugs is definitely the wrong way to onboard them.

Who to pair

This is probably out of scope and could fill an entire blog post, but a tip is to mix pairs. Seniors with juniors. Front end with back end focused skills. Testers with developers if appropriate. And also seniors with seniors.

Remote challenge

If the team is distributed, pair programming does become a challenge. However, it can still be achieved. Pragmatic Bookshelf have a great book on “Remote pairing” by Joe Kutner. And a lot of tools and advice are available.

Not for everyone but for most

As I have shown here, I believe pair programming is essential in a modern project. Sure, not pairing has worked for a long time and will still work. And for some specific teams and people it is still fine not to pair.

But pairing works better with most teams. It reduces risk, builds team culture, increases actual velocity, makes the team enjoy their work and in the end delivers much better products.

Just approach pair programming with some common sense. It is not a factory, but don’t trick yourself into a halfway house either.